Continued from:

2. Algorithms

For evolution is just an algorithm.

It’s a repeatable set of rules. In this case, the generation of life-forms is repeatable, and the rule is that famous one Darwin gave us; the fittest survive and reproduce, and the weakest, do not.

Even to those that accept it, this can seem too simple a conceptual foundation for all of life’s mysteries, but it’s worth remembering that algorithms are deceptively complex to fully understand. From simple beginnings, we still have many unsolved problems.

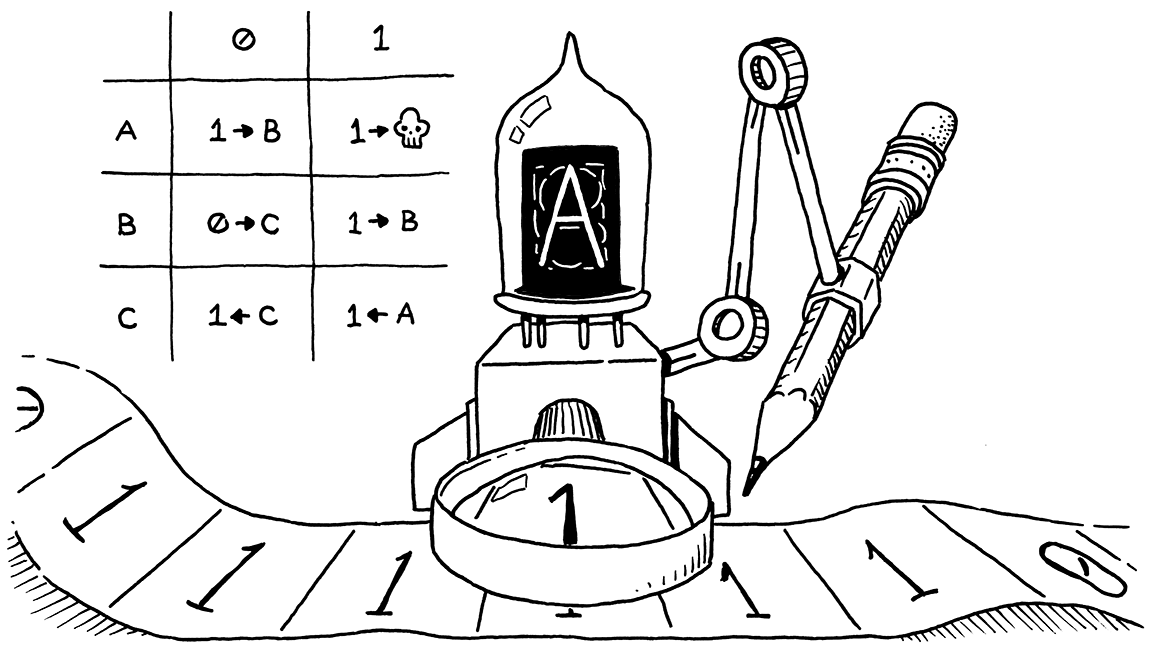

The concept of an algorithm was only formally defined in the 20th century. Alan Turing and his Turing Machines are considered the canonical representation of algorithms. Turing showed that the simplest machine one can imagine (a tape, and a head to read/write from it, according to a table of rules) was enough to calculate any mathematical function.

Turing’s machines were the first models of computers, before computers had physically been made.

Turing had a supervisor, Alonzo Church, who was also working to define algorithms, albeit through a different means. Church invented a formalism called the ‘Lambda Calculus’ (In this case the word ‘Calculus’ just means a formal method). The lambda calculus is just a set of rules for rewriting strings of symbols, by substituting one symbol for others. Church invented what was the first programming language, before a machine existed to execute it. This language is so simple, it could be described with 3 simple rules.

Together it was shown that Turing’s Machines and Church’s Lambda Calculus were exactly equivalent in what they were capable of computing. This was not a coincidence.

When mathematicians analyse concepts like ‘functions’ in computability theory, they are less interested in how fast something can be computed, and more interested in whether something can be computed at all.

As more formalisms were developed, it was always shown that none was more powerful than a Turing machine, in what it could compute.

Even if they could compute something faster than the simple tape and head, the plucky Turing Machine will eventually get there, and reach the same conclusion.

In fact, a Turing Machine can ‘simulate’ any other Turing Machine, and indeed any machine capable of computing as we know it.

For coders and computer scientists

Skip ahead otherwise

Turing Machines are models of imperative programming, and Lambda Calculus models functional programming.

There are numerous variants of Turing Machines and Lambda Calculus that depart or build on from Turing and Church’s original work.

They have occupied particular niches in computer science. Turing Machines are useful in computational complexity theory, whereas Lambda calculi - with their close relationship to functional programming and type theory, find a useful place in computer formulations of mathematical proofs.

Church originally intended for the lambda calculus as an investigation into the foundation of mathematics. It was only intended as a formalism, much like Russell’s Principia, not to be used by working mathematicians. However, as Lambda Calculus and Type theory heavily influenced the developmend of Lisp and ML languages, the latter of which are used in automated theorem provers. Church’s orginal goals are being realised, perhaps in a different form to which Church intended.

Modern mathematical proofs are so long and complicated (running into hundreds of pages), they are effectively impossible to be checked by human minds, and be confident in their correctness. Particularly given that proofs build on one another in a dependency hierarchy, an incorrect assumption in one could bring the whole edifice crashing down like a stack of cards.

In the 21st century, through Lambda Calculus-inspired languages, the minds of machines must check our mathematical proofs, and consequently, the foundationas of mathematics.